Graphics – concept and reality

From way back, this has been one of the main corners of the enthusiast industry, given the popularity of games and a rampaging, cut-throat industry model that has led to exponential growth massively outpacing Moore's Law. But with genuine innovation comes the marketing fluff we have to dive through, and every so often the same problems crop up again. As much as I was a Voodoo fanboy back in the day, I may have cried just a little when it died on its ass (partly) because of its multi-GPU efforts. The Rage Fury Maxx that popped up at the same time was just as bad, but at least ATI lived to tell the tale.

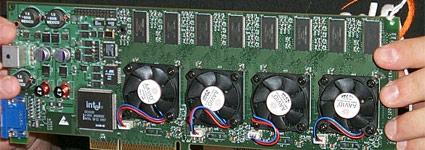

Very few seem to have learnt the lesson, and the multi-GPU cards that followed haven't exactly been successes: the Gigabyte 3D1 consisting of two GeForce 6800 GTs, Sapphire's dual Radeon X1950 card and even Nvidia's GeForce 7950 GX2 (which was ever-so-loosely called a "single" card) were all let-downs with real limitations.

The only working "success" so far has been the Radeon HD 3870 X2 – a genuine example of a decent product that generally works and doesn't cost you a kidney and a limb. The only downside is that it relies on multi-GPU drivers being up to date with the game release calendar, and it’s really the wrong time of year to judge that. Even so, we're still concerned about its long-term prospects – will we end up with another GeForce 7950 GX2 situation, where drivers just aren't good enough?

And while we’re on the topic of multi-GPU, we may as well talk about its expansion into multi-multi-GPU technology. Who actually wants three or four cards in a system? We even heard a hint of six not long ago. Where next: eight, twenty, eighty? Again described as 'enthusiast' (we shudder), is it really worth developing such niche technology? It costs driver teams time and significant effort that could instead be invested in getting a better product out for everyone. From what we’ve been told, these drivers have millions and millions of lines of code – couldn't the money be better spent on optimisation, bug fixing, profile updates and so on? How do these extremely niche products affect the bottom line, and thus the cost of the other products we pay for?

Most people will be more interested in single card performance, because it opens up the use of every motherboard – not one tied to a specific chipset. In this respect, recent mainstream products have certainly been excellent and for a change we have many, very able single slot products, but Tim has already aired his concerns about that.

Enthusiast: Defined

The bottom line is all about how the enthusiast is defined. Hardware companies would like to think we are one of two distinct types. The first is the people who have so much disposable income that they will always get the latest, bleeding edge parts regardless of cost, and reap the accumulated benefit of a slightly better system than anyone else. Well, for three to six months.

Every company that makes an ‘enthusiast’ product only really wants to know this type of person because it’s how they make their margins. Think of 3-way SLI, the 8800 Ultra, CrossFireX, Intel Extreme Edition processors and just about any ‘gamer’ motherboard you can think of.

The great majority of us are enthusiasts for an entirely different reason: cost efficiency, value and a love for tinkering with a product to get the most out of it. We are the type of people who want to get the most out of the least we can get away with – this is where overclocking a £750 system to make it equivalent to something that retails at £1,500 seems like a sound investment. This is where our history lay before all these companies jumped on the ‘enthusiast/gamer’ bandwagon, only to re-spin it in shiny housing and flood it with blue LEDs. It's then pimped out as having bigger numbers than the next guy (euphemisms encouraged).

A snapshot in time: the top five threads under hardware in our own forums – four of which are people asking how to get biggest benefit and most cost efficient upgrade

We've got to stop the rot of this ‘gaming-enthusiast-platform’ and stop being massaged into a corner – no matter how good it might feel. Otherwise, the opportunity to do something homebrew will be diminished to the point where only a handful of the most hardcore electrical engineers with oscilloscopes, thick glasses and degree plaques mounted on the wall will be able to do cool new things.

In some ways I fear for our creed, because I'm afraid there is little tangible benefit to the future ‘features’ hyped up to the max by marketers: PhysX and Killer NIC immediately come to mind. I just hope the press, companies and the community can understand the difference between real enthusiasts and those who just want to update their 3DMark score with an injection of e-peen.

What we, as members of the press, need to ensure is that our reviews of products don't purely become a list of features and buzzwords, and instead talk about their relevance to the actual computing experience. There will always be a market for the latest and greatest, and yes, we always love to know about it even if we can't afford it, but let’s not get coaxed into going down a path of no return that restricts our future choices.
